Key Takeaways

- AI-generated images are getting harder to spot

- AI detection tools exist, but are underused

- Artists’ participation is essential in preventing misuse

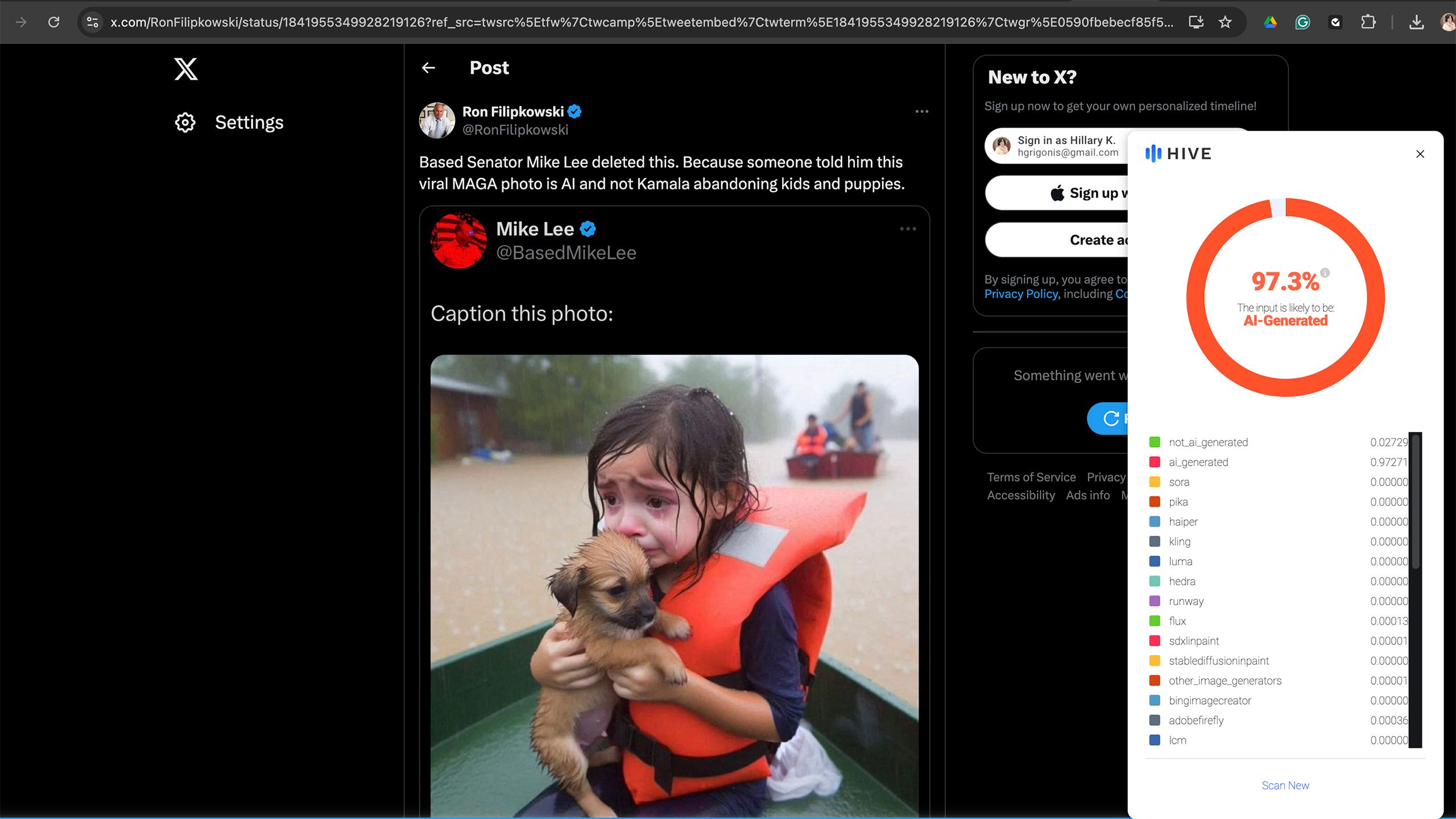

In the wake of the devastation caused by Hurricane Helene, an image depicting a little girl crying while clinging to a puppy on a boat in a flooded street went viral as a depiction of the storm’s devastation. The problem? The girl (and her puppy) don’t actually exist. The image is one of many AI-generated depictions flooding social media in the aftermath of the storms. The image brings up a key issue in the age of AI: generative AI is growing faster than the technology used to flag and label such images.

Several politicians shared the non-existent girl and her puppy on social media in criticism of the current administration, and yet that misappropriated use of AI is one of the more innocuous examples. After all, as the deadliest hurricane in the U.S. since 2017, Helene’s destruction has been photographed by many actual photojournalists, from striking images of families fleeing in floodwaters to a tipped American flag underwater.

However, AI images meant to create misinformation are quickly becoming a problem. A study published earlier this year by Google, Duke University, and several fact-checking organizations found that AI didn’t account for many fake news images until 2023 but now takes up a “sizable fraction of all misinformation-associated images.” From the pope wearing a puffer jacket to an imaginary girl fleeing a hurricane, AI is an increasingly easy way to create false images and video that help perpetuate misinformation.

Using technology to fight technology is key to recognizing and ultimately preventing artificial imagery from attaining viral status. The trouble is that the technological safeguards are growing at a much slower pace than AI itself. Facebook, for example, labels AI content built using Meta AI as well as content it detects as generated on outside platforms. However, the tiny label is far from foolproof and doesn’t work on all types of AI-generated content. The Content Authenticity Initiative, an organization that includes many industry leaders, Adobe among them, is developing promising tech that would leave the creator’s information intact even in a screenshot. However, the Initiative was organized in 2019, and many of the tools are still in beta and require the creator to participate.



AI-generated images are becoming harder to recognize as such

The better generative AI becomes, the harder it is to spot a fake

I first spotted the hurricane girl in my Facebook news feed, and while Meta is putting more effort into labeling AI than X, which allows users to generate images of recognizable political figures, the image didn’t come with a warning label. X later noted the photo as AI in a community note. Still, I knew right away that the image was likely AI-generated: real people have pores, while AI images still tend to struggle with things like texture.

AI technology is quickly recognizing and compensating for its own shortcomings, however. When I tried X’s Grok 2, I was startled not just by its ability to generate recognizable people, but that, in many cases, these “people” were so detailed that some even had pores and skin texture. As generative AI advances, these artificial graphics will only become harder to recognize.


Many social media users don’t take the time to vet the source before hitting that share button

While AI detection tools are arguably growing at a much slower rate, such tools do exist. For example, the Hive AI detector, a plugin that I’ve installed on Chrome on my laptop, rated the hurricane girl as 97.3 percent likely to be AI-generated. The trouble is that these tools take time and effort to use. A majority of social media browsing is done on smartphones rather than laptops and desktops, and, even if I decided to use a mobile browser rather than the Facebook app, Chrome doesn’t allow such plugins on its mobile variant.

For AI detection tools to make the most significant impact, they need to be both embedded into the tools consumers already use and have widespread participation from the apps and platforms used most. If AI detection takes little to no effort, then I believe we could see more widespread use. Facebook is making an attempt with its AI label, though I do think it needs to be far more noticeable and better at detecting all types of AI-generated content.

Widespread participation will likely be the trickiest to achieve. X, for example, has prided itself on creating the Grok AI with a loose moral code. A platform that is very likely attracting a large percentage of users to its paid subscription with lax ethical guidelines, such as the ability to generate images of politicians and celebrities, has very little monetary incentive to join forces with those fighting against the misuse of AI. Even AI platforms with restrictions in place aren’t foolproof: a study from the Center for Countering Digital Hate was successful in bypassing these restrictions to create election-related images 41 percent of the time using Midjourney, ChatGPT Plus, Stability.ai DreamStudio and Microsoft Image Creator.

If the AI companies themselves worked to properly label AI, then these safeguards could launch at a much faster rate. This applies not just to image generation, but to text as well, as ChatGPT is working on a watermark as a way to help educators recognize students who took AI shortcuts.

Associated

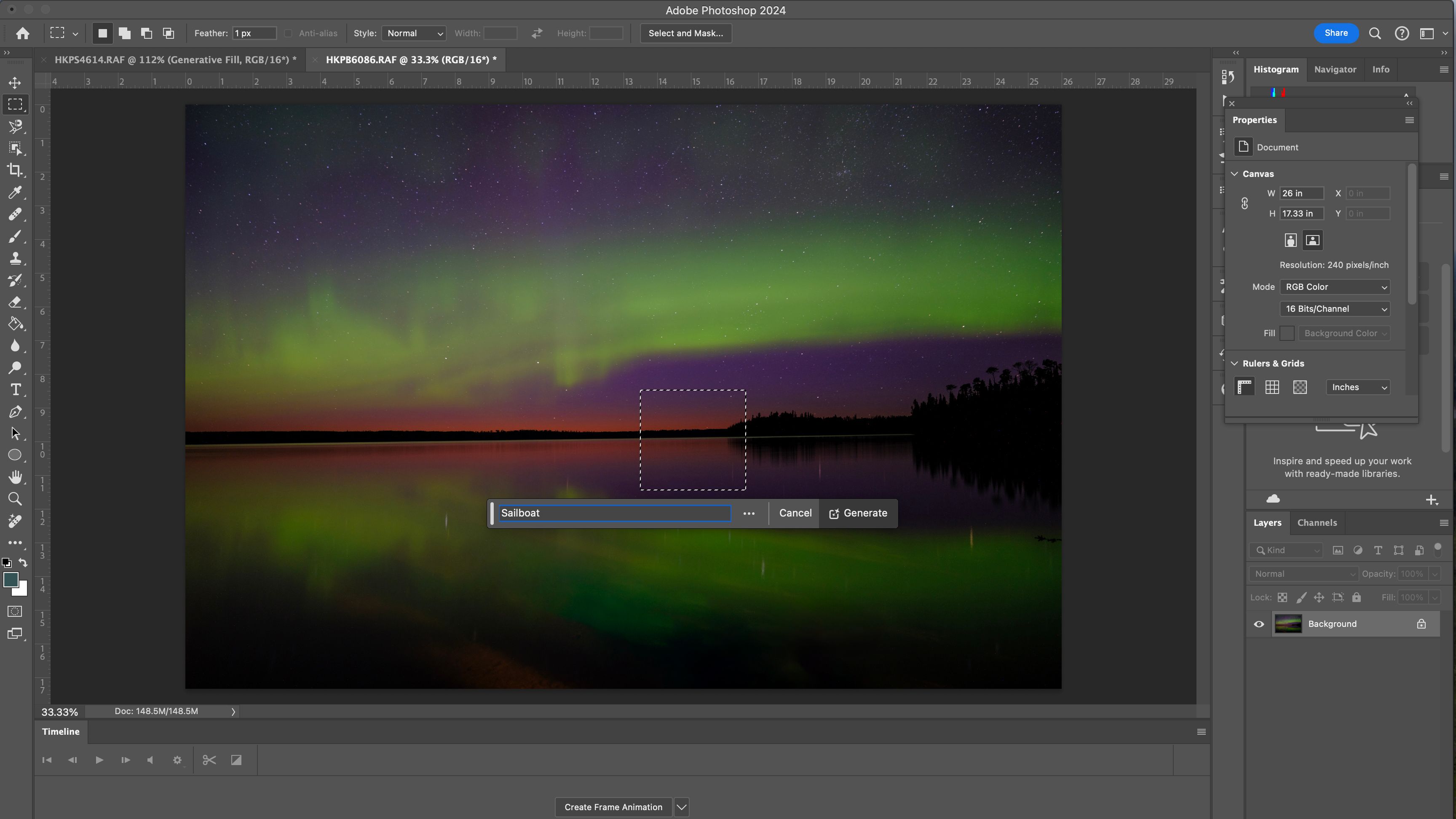

Adobe’s new AI tools will make your next creative project a breeze

At Adobe Max, the corporate introduced a number of new generative AI instruments for Photoshop and Premiere Professional.

Artist participation is also key

Proper attribution and AI-scraping prevention could help incentivize artists to participate

While the adoption of safeguards by AI companies and social media platforms is critical, the other piece of the equation is participation by the artists themselves. The Content Authenticity Initiative is working to create a watermark that not only keeps the artist’s name and proper credit intact, but also details if AI was used in the creation. Adobe’s Content Credentials is an advanced, invisible watermark that labels who created an image and whether or not AI or Photoshop was used in its creation. The data can then be read by the Content Credentials Chrome extension, with a web app expected to launch next year. These Content Credentials work even when someone takes a screenshot of the image, and Adobe is also working on using this tool to prevent an artist’s work from being used to train AI.

Adobe says that it only uses licensed content from Adobe Stock and the public domain to train Firefly, but is building a tool to block other AI companies from using an image for training.

The trouble is twofold. First, while the Content Authenticity Initiative was organized in 2019, Content Credentials (the name for that digital watermark) is still in beta. As a photographer, I now have the ability to label my work with Content Credentials in Photoshop, yet the tool is still in beta and the web tool to read such data isn’t expected to roll out until 2025. Photoshop has tested a number of generative AI tools and launched them into the fully-fledged version since, but Content Credentials seems to be a slower rollout.

Second, content credentials won’t work if the artist doesn’t participate. Currently, content credentials are optional and artists can choose whether or not to add this data. The tool’s ability to help prevent an image from being scraped to train AI and its ability to keep the artist’s name attached to the image are good incentives, but the tool doesn’t yet seem to be widely used. If the artist doesn’t use content credentials, then the detection tool will simply show “no content credentials found.” That doesn’t mean that the image in question is AI; it simply means that the artist didn’t choose to participate in the labeling feature. For example, I get the same “no credentials” message when viewing the Hurricane Helene photographs taken by Associated Press photographers as I do when viewing the viral AI-generated hurricane girl and her equally generated puppy.

While I do believe that the rollout of content credentials is moving at a snail’s pace compared to the rapid deployment of AI, I still believe that it could be key to a future where generated images are properly labeled and easily recognized.

The safeguards to prevent the misuse of generative AI are starting to trickle out and show promise. But these systems will need to be developed at a much faster pace, adopted by a wider range of artists and technology companies, and built in a way that makes them easy for anyone to use in order to make the biggest impact in the AI era.
